WhatsApp has launched a new AI chatbot feature in its messaging app. The company describes the feature as optional, but users cannot remove it. The move has sparked user backlash and expert concerns over privacy and data use, and the company is defending its approach amid ongoing legal scrutiny.

WhatsApp has introduced a new artificial intelligence feature integrated within its messaging application, which it describes as “entirely optional.” However, the company acknowledges that while users can choose whether to interact with it, the AI tool itself cannot be disabled or removed from the app. This development has sparked discussion and concern among some users.

The AI feature is marked by a prominent blue circle with pink and green splashes in the bottom right corner of users’ Chats screens. Tapping this icon opens a chatbot that responds to user queries. Meta, WhatsApp’s parent company, has noted that the rollout is currently limited to certain countries, so many users may not yet see the feature. Alongside the chatbot icon, a search bar invites users to “Ask Meta AI or Search.” Similar AI tools are also available on Meta’s other platforms, Facebook Messenger and Instagram.

Powered by Meta’s large language model, Llama 4, the AI is capable of answering questions, offering educational responses, and helping users generate new ideas. During a test interaction, the chatbot provided a detailed weather forecast for Glasgow, including temperature, precipitation chances, wind, and humidity. However, it provided one incorrect link relating to a location in London rather than Glasgow, highlighting some limitations in its responses.

The ‘always-on’ nature of the feature echoes an earlier controversy over Microsoft’s Recall feature, which initially could not be turned off but was later changed in response to user feedback. WhatsApp told the BBC, “We think giving people these options is a good thing and we’re always listening to feedback from our users.”

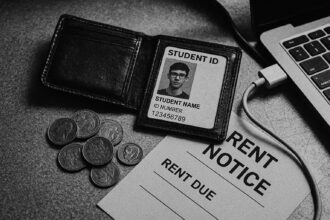

Despite this, feedback from users in Europe has been largely negative, with many expressing frustration on social media platforms such as X (formerly Twitter), Bluesky, and Reddit, particularly about the inability to disable the AI. Guardian columnist Polly Hudson voiced her dissatisfaction openly, joining others in questioning the utility and intrusiveness of the feature.

Some experts have raised serious concerns about privacy and data use. Dr Kris Shrishak, an adviser on AI and privacy, criticised Meta for “exploiting its existing market” and described the compulsory nature of the AI tool as problematic. In his comments to the BBC, he stated, “No one should be forced to use AI. Its AI models are a privacy violation by design – Meta, through web scraping, has used personal data of people and pirated books in training them. Now that the legality of their approach has been challenged in courts, Meta is looking for other sources to collect data from people, and this feature could be one such source.”

Supporting this, an investigation by The Atlantic suggested that Meta may have incorporated millions of pirated books and research papers retrieved from Library Genesis (LibGen) in training its Llama AI model. Meta is currently defending a court case brought by authors over the use of their copyrighted material, though the company has declined to comment directly on The Atlantic’s investigation.

Regarding data protection, WhatsApp clarifies that the AI “can only read messages people share with it” and emphasises that personal chats remain end-to-end encrypted and inaccessible to Meta. The Information Commissioner’s Office in the UK has indicated it will continue to monitor how Meta AI uses personal data on WhatsApp. The ICO said, “Personal information fuels much of AI innovation so people need to trust that organisations are using their information responsibly.” It also highlighted the importance of organisations complying with data protection requirements, especially concerning children’s data.

Dr Shrishak further advised caution, reminding users that while normal chats remain protected by end-to-end encryption, interactions with the AI mean that Meta has access to the data shared with it: “Every time you use this feature and communicate with Meta AI, you need to remember that one of the ends is Meta, not your friend.” WhatsApp reiterates this point by urging users not to share information they do not wish to be used or retained by the AI.

This announcement comes in the same week that Meta revealed it is testing artificial intelligence technology on Instagram in the United States to detect accounts where teenagers may have misrepresented their age. The test is part of Meta’s broader moves to adapt its platforms to evolving regulatory and social expectations.

Source: Noah Wire Services

Noah Fact Check Pro

The draft above was created using the information available at the time the story first emerged. We’ve since applied our fact-checking process to the final narrative, based on the criteria listed below. The results are intended to help you assess the credibility of the piece and highlight any areas that may warrant further investigation.

Freshness check

Score: 9

Notes:

The information appears recent, referencing contemporary concerns and features currently being implemented by Meta. There is no clear indication of recycled or outdated content.

Quotes check

Score: 8

Notes:

Quotes from Dr Kris Shrishak to the BBC are noted, but no earlier online references to these specific quotes were found, so their originality cannot be fully verified.

Source reliability

Score: 10

Notes:

The narrative originates from a well-known, reputable news outlet, the BBC, which generally enhances the reliability of the information presented.

Plausibility check

Score: 8

Notes:

The introduction of a new AI feature aligns with current trends and Meta’s ongoing efforts to incorporate AI across its platforms. However, user feedback and privacy concerns highlight potential limitations and challenges.

Overall assessment

Verdict (FAIL, OPEN, PASS): OPEN

Confidence (LOW, MEDIUM, HIGH): HIGH

Summary:

While the narrative is fresh and the source is highly reliable, user feedback and specific privacy concerns introduce complexity. The lack of prior online mentions for some quotes adds to the open nature of this assessment.