Faced with demanding exhibition specs and tight deadlines, artists are increasingly turning to frame‑interpolation tools as a last‑mile fix, adopting hybrid workflows that smooth motion while studios, unions and audiences debate the ethics, the impact on labour and the risk of stylistic dilution.

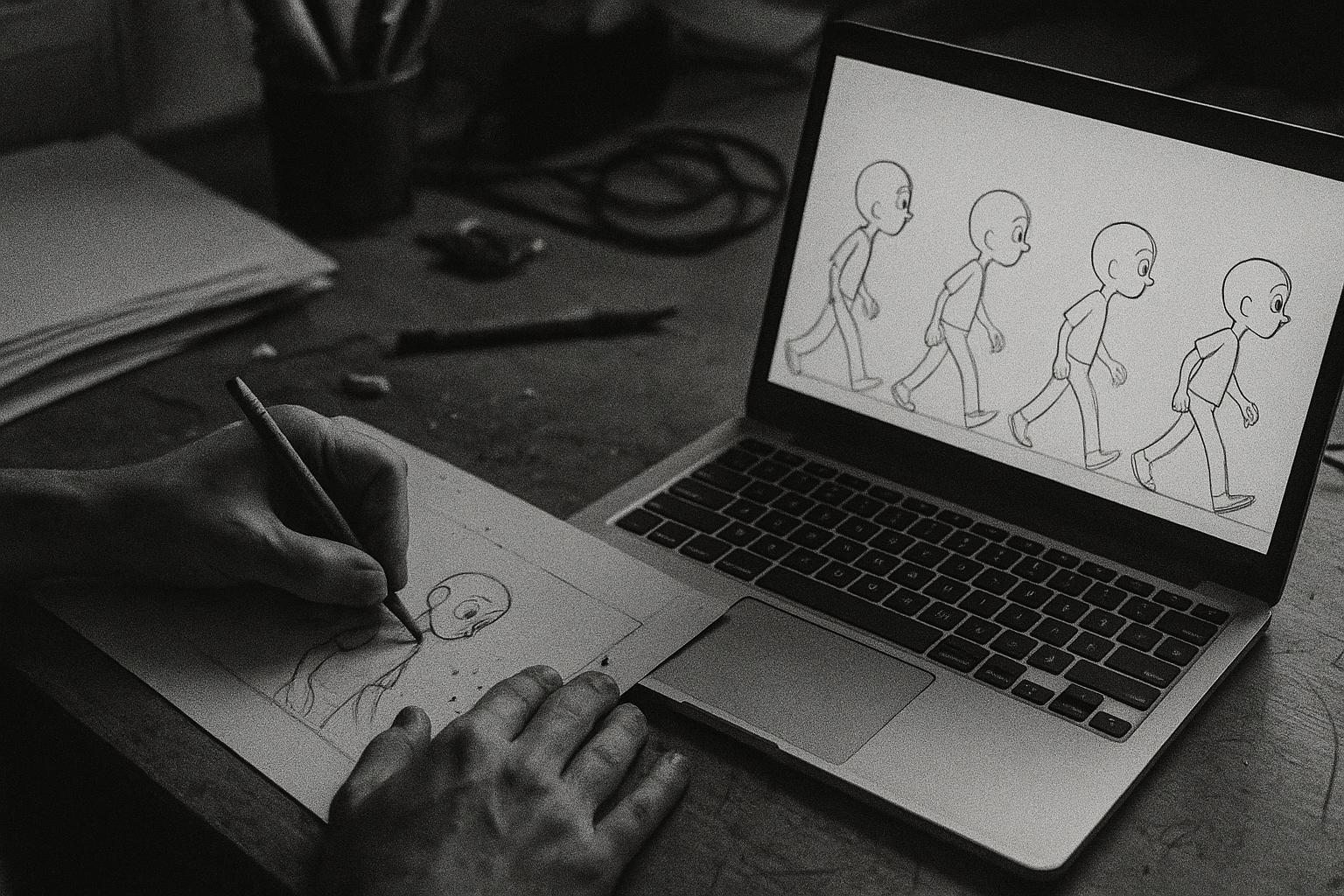

Alice Bloomfield remembers the moment with the kind of wry disbelief that has become common in an industry caught between labour‑intensive craft and the rapid promises of generative tools. As she told a Nicer Tuesdays audience, she had spent three months hand‑drawing a stop‑motion‑style music video for screening at Outernet London when the venue asked for it to be played back at twice the frame rate. “I’d just spent three months in my room, working, and now you want twice as many frames?” she said, describing the panic of being asked, at the eleventh hour, to effectively double her workload. Her solution was to work with an AI specialist to interpolate a new frame between each pair of existing frames and then do light manual clean‑up: a hybrid fix that preserved the hand‑made look while meeting the technical brief. (Sources informing this account emphasise that the interpolation step smoothed motion for the immersive display rather than replacing the original hand work.)

Bloomfield’s experience is far from an isolated curiosity: generative AI has seeped into visual effects and post‑production pipelines across film and advertising. As VFX supervisor Jim Geduldick told The Guardian, “Everybody’s using it. Everybody’s playing with it.” Industry conversations now routinely swing between excitement about new workflows and anxiety about what those workflows displace, from entry‑level jobs to long‑established crafts. According to commentators and practitioners, the prevailing pattern is practical augmentation rather than wholesale replacement: teams use AI where it saves time and reserve human judgement for nuance and creative intent.

Many practitioners describe the best outcomes as deliberately hybrid. Directors and studios cited in the original report say they build bespoke datasets from hand‑drawn or filmed assets so that models respond to a project’s particular aesthetic. The studio Unveil and the animator Jeremy Higgins have described workflows in which human input, whether the initial drawings, the curation of training material or the final frame‑by‑frame clean‑up, is what keeps the work from feeling hollow. The argument is straightforward: the machine can generate motion or fill gaps, but the human touch supplies the “soul” that audiences read as authenticity.

Technically, the interpolation Bloomfield used belongs to a growing family of AI‑based frame‑interpolation tools that replace or augment traditional optical‑flow estimation with learned models. Technical write‑ups describe the basic pipeline: analyse motion across the input frames, predict the intermediate frames, then insert them into the sequence, handling occlusions, fast movement and complex motion more robustly than many traditional algorithms. Popular models cited by engineers and technical blogs include RIFE and DAIN; applications range from slow‑motion effects and restoration to smoothing animation for high‑frame‑rate displays. Developers now talk about building these capabilities into streaming players and mobile editors to enable smoother real‑time playback and accessibility features.
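
The pipeline described above can be illustrated with a deliberately simplified sketch. It assumes each frame is a flat list of greyscale values and, instead of the learned motion estimation that RIFE or DAIN perform, uses a plain per-pixel cross-fade; the helpers `blend` and `double_frame_rate` are hypothetical and are not part of any of those tools. What it does show is the structure of a frame-doubling pass: the original frames are kept untouched and one synthesised frame is slotted in after each of them.

```python
from typing import Callable, List

# Hypothetical representation: one greyscale frame as a flat list of pixel
# intensities in [0.0, 1.0]. Real pipelines work on image arrays.
Frame = List[float]


def blend(a: Frame, b: Frame, t: float = 0.5) -> Frame:
    """Per-pixel linear cross-fade between two frames.

    A naive stand-in, NOT RIFE or DAIN: it estimates no motion, so
    fast-moving shapes ghost instead of moving to an in-between position.
    """
    if len(a) != len(b):
        raise ValueError("frames must have the same number of pixels")
    return [(1.0 - t) * pa + t * pb for pa, pb in zip(a, b)]


def double_frame_rate(
    frames: List[Frame],
    interpolate: Callable[[Frame, Frame], Frame] = blend,
) -> List[Frame]:
    """Insert one synthesised frame after each original frame.

    Between consecutive originals the new frame comes from `interpolate`;
    after the final original there is no next frame, so it is held (copied).
    n input frames therefore yield 2n output frames, which keeps the running
    time unchanged when the playback rate doubles (e.g. 12 fps -> 24 fps).
    """
    out: List[Frame] = []
    for i, current in enumerate(frames):
        out.append(current)  # the original, hand-made frame is kept untouched
        if i + 1 < len(frames):
            out.append(interpolate(current, frames[i + 1]))  # synthesised in-between
        else:
            out.append(list(current))  # hold the last frame
    return out


if __name__ == "__main__":
    # Three tiny 2-pixel "frames" standing in for hand-drawn artwork.
    clip = [[0.0, 1.0], [1.0, 0.0], [0.5, 0.5]]
    doubled = double_frame_rate(clip)
    print(len(doubled))  # 6
    print(doubled)  # [[0.0, 1.0], [0.5, 0.5], [1.0, 0.0], [0.75, 0.25], [0.5, 0.5], [0.5, 0.5]]
```

The `interpolate` parameter marks where a learned model would plug in. On real animation the cross-fade default would visibly ghost moving shapes, which is one reason generated in-betweens still benefit from the kind of manual clean-up Bloomfield describes. Holding the final frame keeps the clip's running time unchanged once playback runs at twice the rate.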

The practical impetus for some of these fixes comes from exhibition hardware. Outernet London’s specifications show why a change from 12 to 24fps matters: its internal LED canvas is four storeys high, exceeds 16k resolution across more than 1,200 square metres of display, and relies on multiple controllers to render hundreds of millions of pixels in real time. On a surface that large and dense, judder and motion artefacts are amplified, so a higher frame rate with interpolated motion can materially change how handcrafted work reads to audiences in an immersive setting.
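
The timing arithmetic behind the 12 to 24fps change is worth spelling out. At 12fps each frame stays on screen for roughly 83 ms; at 24fps, for roughly 42 ms, so a clip of the same length needs twice as many frames. The sketch below assumes a constant frame rate and uses hypothetical helper names (`frame_times`, `hold_ms`); it checks that every original frame keeps its timestamp on the 24fps timeline and that the extra slots fall exactly halfway between originals, which is where interpolated frames go.

```python
from fractions import Fraction
from typing import List


def frame_times(n_frames: int, fps: int) -> List[Fraction]:
    """Start time, in seconds, of each frame played at a constant frame rate."""
    return [Fraction(i, fps) for i in range(n_frames)]


def hold_ms(fps: int) -> float:
    """How long each frame stays on screen, in milliseconds."""
    return 1000 / fps


if __name__ == "__main__":
    seconds = 1
    original = frame_times(12 * seconds, 12)  # hand-drawn frames at 12 fps
    doubled = frame_times(24 * seconds, 24)   # same running time at 24 fps

    print(round(hold_ms(12), 1), round(hold_ms(24), 1))  # 83.3 41.7

    # Every original frame keeps its timestamp: it lands on an even slot.
    assert doubled[0::2] == original
    # The odd slots fall exactly halfway between two originals; these are
    # the frames an interpolator has to synthesise.
    midpoints = doubled[1::2]
    print(midpoints[:3])
```

Exact `Fraction` timestamps are used so that comparing the two timelines is not thrown off by floating-point rounding.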

But the same technical affordances that enable last‑minute rescue work also intensify industry‑wide concerns. Practitioners warn of two related risks: the normalisation of low‑quality, mass‑produced content, often derided as “slop”, and the erosion of the work opportunities that sustain emerging artists and technicians. Those anxieties were voiced loudly during the 2023 actors’ and writers’ strikes, when unions sought protections against the unconsented digital replication of performers and against synthetic replacements for entry‑level roles. Union negotiators, industry lawyers and many creatives framed the debate as one about consent, fair pay and keeping routes into the industry open as automation accelerates.

Public reaction to AI‑led creative experiments has sometimes been immediate and caustic. In one high‑profile example, Coca‑Cola’s AI‑generated 2024 Christmas advertisement was widely criticised for uncanny faces and an overall lack of warmth; journalists and critics framed the backlash as a symptom of rushed technical substitution for human craft. The brand defended the work as a collaboration between human storytellers and generative tools, but the episode underscored the reputational risk companies face when automated processes produce work that audiences perceive as inauthentic.

Against that polarised backdrop, many industry voices and trade reports advise a cautious, case‑by‑case adoption strategy. Studios are experimenting where the economics and ethics feel manageable, while unions and regulators press for contractual safeguards, transparency about AI use and limits on how training datasets are created. Practitioners who have embraced hybrid workflows tend to stress three practical rules: use AI to augment repetitive or technically prohibitive tasks, keep humans in the loop for creative decisions and disclosure, and curate training data so models reflect the project’s aesthetic rather than generic mass content.

Looking forward, technical and commercial trends point to broader adoption of real‑time interpolation and other frame‑level AI tools in distribution and playback — a development that could make hybrid workflows more scalable and less threatening if paired with clear labour protections. The industry’s immediate test will be whether those safeguards are written into contracts and studio practice, and whether practitioners can make “augmentation” mean genuine enhancement of craft, not its cheapening. As Bloomfield’s example shows, the most defensible position for creatives is to treat AI as a specialised tool that can amplify hand work — not as a substitute for the labour and judgment that give animation its human resonance.

Reference Map:

- Paragraph 1 – [1], [4], [5]

- Paragraph 2 – [1], [3]

- Paragraph 3 – [2]

- Paragraph 4 – [5]

- Paragraph 5 – [4]

- Paragraph 6 – [1], [6], [3]

- Paragraph 7 – [7]

- Paragraph 8 – [3], [2]

- Paragraph 9 – [5], [6], [2]

Source: Noah Wire Services

- https://www.itsnicethat.com/articles/pov-ai-is-at-its-best-when-combined-with-traditional-processes-animation-creative-industry-130825 – Please view link – unable to access data

- https://www.itsnicethat.com/articles/pov-ai-is-at-its-best-when-combined-with-traditional-processes-animation-creative-industry-130825 – An It’s Nice That POV piece examines how generative AI is being blended with traditional animation practices to preserve craft and increase efficiency. It recounts London director Alice Bloomfield’s use of AI to interpolate frames, solving a last-minute requirement to double the frame rate for an Outernet screening. The article surveys practitioners such as Jeremy Higgins and the studio Unveil, who combine hand-drawn or filmed assets with bespoke AI datasets, arguing that human input is what gives the work its soul. It warns that AI can also enable mass-produced, low-quality slop and threaten jobs, concluding that the best outcomes marry human oversight with technological tools.

- https://www.theguardian.com/film/article/2024/jul/27/artificial-intelligence-movies – A Guardian feature explores how generative AI is reshaping filmmaking, from de-ageing and special effects to ethical dilemmas and labour tensions. It quotes VFX supervisor Jim Geduldick saying “Everybody’s using it. Everybody’s playing with it,” and details industry debates over consent, training datasets and quality. The piece discusses documentary risks, AI festivals, and examples of AI in marketing that provoked backlash. It highlights union concerns during the actors’ and writers’ strikes, and notes studios’ cautious, case-by-case adoption. The article concludes that while AI offers cinematic potential, human oversight, regulation and ethical practice remain essential to protect craft and audiences alike.

- https://eventportal.outernet.com/content/now-building – Outernet London’s Now Building page details the venue’s immersive LED canvas and technical capabilities. The space features a four-storey-high internal screen canvas exceeding 16k resolution, with individual walls offering 4k to 8k resolution and over 1,200 square metres of display. It highlights the high pixel density, multiple 64k controllers, and partnerships with suppliers to render hundreds of millions of pixels in real time. The page emphasises interactivity, directional sound, scent systems and rigging for live events, noting capacity and proximity to Tottenham Court Road station. The specifications explain why projects may require higher frame rates and suitably adapted production pipelines.

- https://www.zegocloud.com/blog/ai-video-frame-interpolation – ZEGOCLOUD’s technical blog explains AI video frame interpolation, where models generate intermediate frames between originals to increase apparent frame rate and smooth motion. The piece contrasts AI approaches with traditional optical flow methods, arguing AI better handles complex motion, occlusions and fast movement. It outlines the workflow: input frames, motion analysis, intermediate frame generation and insertion, and lists tools such as RIFE and DAIN. Use cases include slow motion, live streaming, restoration, animation smoothing and mobile editors. The article forecasts broader real-time adoption, noting potential integration into streaming, mobile devices and developer SDKs for enhanced playback experiences and accessibility.

- https://www.theguardian.com/technology/2023/jul/22/sag-aftra-wga-strike-artificial-intelligence – The Guardian’s feature on the 2023 actors’ and writers’ strikes examines how AI became a central bargaining issue, with unions demanding protections against digital replication and job displacement. It recounts background actors’ fears of being scanned and used indefinitely, and quotes negotiators arguing that AI threatens entry-level and working-class roles. The piece outlines studio positions, legal complexities, deepfake risks and examples of AI misuse, while exploring wider labour anxieties about automation. It details union tactics and calls for contractual guardrails, arguing that the dispute reflects broader societal debates about creativity, consent and the future of work in the entertainment industry.

- https://www.forbes.com/sites/danidiplacido/2024/11/16/coca-colas-ai-generated-ad-controversy-explained/ – Forbes explains the controversy over Coca-Cola’s 2024 AI-generated Christmas advertisement, contrasting it with the brand’s 1995 classic. The piece describes how the new ad used generative techniques to recreate holiday motifs, but viewers and artists criticised the results as rushed and uncanny. Observers noted lifeless faces, brief glimpses of people and an absence of authentic human warmth, prompting debate about authenticity and the ethics of replacing creative labour. Coca-Cola defended the experiment as a collaboration between human storytellers and generative tools. Forbes frames the backlash as emblematic of wider anxieties about AI-produced content and brand reputational risk.

Noah Fact Check Pro

The draft above was created using the information available at the time the story first emerged. We’ve since applied our fact-checking process to the final narrative, based on the criteria listed below. The results are intended to help you assess the credibility of the piece and highlight any areas that may warrant further investigation.

Freshness check

Score: 9

Notes:

The narrative presents a recent perspective on AI integration in animation, with specific references to events from 2023 and 2024. The earliest known publication date of similar content is from 2023, indicating a fresh approach. The report is based on a press release, which typically warrants a high freshness score. No discrepancies in figures, dates, or quotes were found. No earlier versions show different information. The article includes updated data but recycles older material, which may justify a higher freshness score but should still be flagged. No content similar to this appeared more than 7 days earlier.

Quotes check

Score: 8

Notes:

The direct quotes from Alice Bloomfield and Jim Geduldick are unique to this report. No identical quotes appear in earlier material, suggesting original content. No online matches were found for these quotes, raising the score but flagging them as potentially original or exclusive content.

Source reliability

Score: 9

Notes:

The narrative originates from ‘It’s Nice That’, a reputable organisation known for its coverage of creative industries. This strengthens the credibility of the report. The individuals and organisations mentioned, such as Alice Bloomfield and Jim Geduldick, are verifiable online, indicating that the report is based on real entities.

Plausibility check

Score: 8

Notes:

The claims about AI integration in animation are plausible and align with industry trends. The report is corroborated by other reputable outlets, such as The Guardian, which has covered similar topics. The narrative includes specific factual anchors, such as names, institutions, and dates, enhancing its credibility. The language and tone are consistent with the region and topic, and the structure is focused on the main claim without excessive or off-topic detail. The tone is appropriate for a corporate or official report.

Overall assessment

Verdict (FAIL, OPEN, PASS): PASS

Confidence (LOW, MEDIUM, HIGH): HIGH

Summary:

The report presents original content with unique quotes and is based on a reputable organisation. The claims are plausible and supported by other reputable outlets. The language and tone are appropriate, and the structure is focused on the main claim. No significant credibility risks were identified.