As artificial intelligence rapidly reshapes societies worldwide, the race intensifies among nations to establish ethical and effective governance frameworks. Amid technological rivalry and unequal representation, experts warn urgent collaborative action is needed to prevent AI from exacerbating inequality and threatening democratic values.

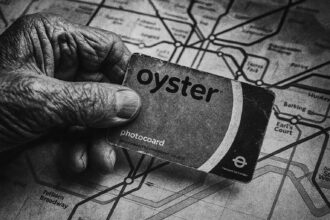

As we forge ahead into the 21st century, the acceleration of artificial intelligence marks one of the most transformative and rapid shifts in our technological landscape. Once a niche pursuit for scientists and science fiction enthusiasts, AI has seamlessly integrated into our everyday lives, influencing everything from employment and communication to warfare and electoral processes. With algorithms dictating news consumption, advertising exposure, and even financial opportunities, a critical question arises: who dictates the rules governing this powerful and dynamic technology?

The swift evolution of AI presents a complex tableau: from sophisticated chatbots to autonomous weapons, its implications span myriad sectors. Governments are increasingly using AI to surveil populations, corporations pursue enhanced productivity through automation, militaries prepare for technologically advanced warfare, and healthcare providers leverage AI for faster diagnoses and treatments. This omnipresence of AI transcends mere convenience; it has become a fulcrum of power. Nations are engaged in a race not only to advance the technology but also to establish governance frameworks that dictate its application and ethical considerations.

This competitive landscape is epitomised by the rivalry between the United States and China, both of which dominate the global AI market due to their vast data resources, computational power, and substantial investments. While the U.S. and its allies reinforce their technological prowess through collaborative treaties—such as the recently signed legally binding international agreement focusing on human rights and democratic values—China continues to pursue aggressive AI strategies, prioritising advancement in military and commercial arenas.

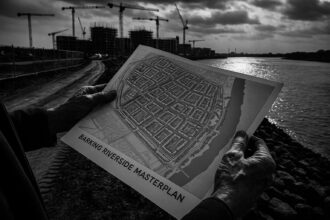

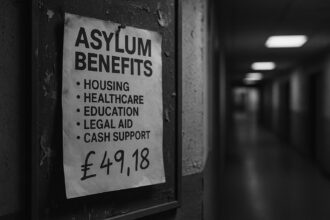

In contrast, although Europe is making strides with legislative efforts like the EU’s Artificial Intelligence Act, it faces the daunting challenge of balancing innovation with robust ethical standards. Moreover, the focus on regulatory frameworks in the Global North inadvertently sidelines many nations in Africa, Latin America, and Asia, raising pressing concerns about digital equity. Countries in these regions often find themselves subjected to AI tools designed without their input, risking a new form of digital colonialism. This gap in representation can perpetuate existing inequalities and marginalise those who fall outside the ambit of major tech developments, ultimately stunting their potential for growth and autonomy in a digital world.

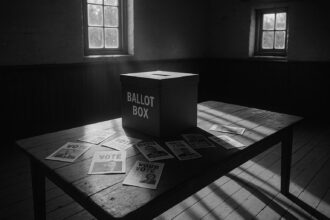

The prevailing landscape of AI governance resembles a legal grey area, fraught with ambiguity, as there is no universally accepted regime akin to the treaties that regulate nuclear power. Existing guidelines and principles from various organisations, such as the OECD and the World Economic Forum, whilst well-intentioned, lack enforceability and coherence, highlighting an urgent need for clear global standards. In nations where democratic institutions are fragile, AI can exacerbate authoritarianism, as seen in the use of facial recognition technologies for surveillance and social credit systems designed to suppress dissent. Such tools not only threaten individual freedoms but also jeopardise the integrity of democratic processes worldwide.

The ethical ramifications of deploying AI in critical areas like military operations exemplify the pressing moral questions we face: Should machines make decisions concerning life and death? Who is held accountable for errors resulting from flawed AI systems? As these questions resonate ever more loudly, the drive for a cohesive international framework grows increasingly imperative. It is crucial that discussions about AI governance not solely reflect the interests of powerful nations but embody a collective responsibility encompassing the diverse perspectives of all stakeholders globally.

The push for a global governance framework should not be left solely to governments. Civil society, educational institutions, and the media play vital roles as guardians of transparency and advocates for privacy rights. Their participation is essential in ensuring that AI applications account for public interests and mitigate potential harms. The very tech companies that have contributed to AI’s proliferation, such as Google, Microsoft, and OpenAI, must also take part in shaping ethical standards. While vigilance is essential to prevent undue corporate influence, their cooperation is also fundamental to forging practical governance pathways.

The international community stands at a pivotal juncture; as AI technologies continue to advance, so too do the risks they pose. Time is of the essence. Without timely and collaborative action, we may default to a reactive stance, where crisis management overshadows proactive governance, risking detrimental outcomes.

Thus, the paramount question is no longer whether AI regulation is warranted, but whether we can unite to create these frameworks with courage and determination. This moment in history is crucial, as we weigh both the significant promise and the inherent threats of AI. How we navigate this landscape will ultimately shape the fabric of our future, making it essential that the rules governing AI are crafted by a consortium of voices, not merely by those wielding wealth and power, but by humanity in its entirety, for the collective benefit of all.

Source: Noah Wire Services

- https://www.thestatesman.com/opinion/world-must-join-hands-to-curb-digital-colonialism-1503436197.html – Please view link – unable to access data

- https://www.ft.com/content/4052e7fe-7b8a-4c42-baa2-b608ba858df5 – The United States, European Union, and United Kingdom have signed the first legally binding international treaty on artificial intelligence (AI), emphasizing human rights and democratic values. This convention, drafted by over 50 countries, mandates accountability for harmful AI outcomes, insists on respecting equality and privacy rights, and provides legal recourse for AI-related rights violations. The signing aims to prevent fragmented national regulations from stifling innovation and signals a unified international approach to AI regulation, supplementing regional initiatives like the EU’s AI Act.

- https://apnews.com/article/f755788da7d5905fcc2d44edf93c4bec – A United Nations advisory body has stressed the urgent need for global governance of AI, recommending that the UN establish inclusive institutions to regulate the technology. In a detailed report, the group acknowledged AI’s potential to revolutionize fields such as science, healthcare, and energy but warned of its risks if left unregulated. The advisory panel, composed of 39 experts from 33 countries, called for principles rooted in international and human rights law to guide AI governance, including forming an international scientific panel on AI and initiating a global dialogue on AI governance at the UN.

- https://qa.time.com/6308795/yi-zeng-2/ – Yi Zeng, a professor at the Chinese Academy of Sciences, was inspired by the 2001 film A.I. Artificial Intelligence to pursue work in AI, aspiring to create robots with human-like moral capacities. His focus has been on developing ‘brain-inspired intelligence.’ By 2016, Yi grew concerned about AI’s risks and engaged more with policymakers, leading to the formulation of the Beijing AI Principles in 2019. He has actively promoted international cooperation, participating in initiatives like UNESCO’s Ethics of Artificial Intelligence and addressing the UN Security Council. Despite geopolitical tensions and surveillance concerns, Yi emphasizes the need for global collaboration on AI safety and ethics, supported by public sentiment in both the US and China favoring regulatory frameworks.

- https://www.reuters.com/technology/artificial-intelligence/un-advisory-body-makes-seven-recommendations-governing-ai-2024-09-19/ – An artificial intelligence advisory body at the United Nations has issued a final report with seven recommendations to address AI-related risks and governance gaps. Created last year, this 39-member advisory body aims to provide impartial and reliable scientific knowledge about AI, addressing information asymmetries between AI labs and the rest of the world. Key recommendations include the establishment of a global AI standards exchange, a global AI capacity development network, and a new policy dialogue on AI governance. The UN also proposes a global AI fund to address capacity gaps and the creation of a global AI data framework for ensuring transparency and accountability.

- https://time.com/7012779/amandeep-singh-gill/ – Amandeep Singh Gill, the United Nations Secretary-General’s Envoy on Technology, is a key figure in AI governance. He is responsible for coordinating digital cooperation among UN member states, the private sector, and academia. Gill highlights the urgent need to update global governance tools to address the rapid development of AI technologies. A 39-member ‘High-Level Advisory Body on Artificial Intelligence’ was formed to provide guidance on global AI governance, aiming to avoid an AI arms race reminiscent of the Cold War. An interim report laid foundational recommendations for global AI governance, including bridging the tech gap between the West and the Global South.

- https://time.com/7178133/international-network-ai-safety-institutes-convening-gina-raimondo-national-security/ – The US has convened the inaugural meeting of the International Network of AI Safety Institutes (AISIs), comprising institutes from nine nations and the European Commission, to address national security concerns related to AI. US Commerce Secretary Gina Raimondo emphasized the need for advancing AI responsibly to avoid endangering people. The meeting, held in San Francisco, focused on managing risks posed by advanced AI technologies. A new task force, Testing Risks of AI for National Security (TRAINS), was also announced to address AI’s national security implications. The event marked a significant step toward international cooperation on AI governance, with upcoming conferences and summits planned to further the cause.