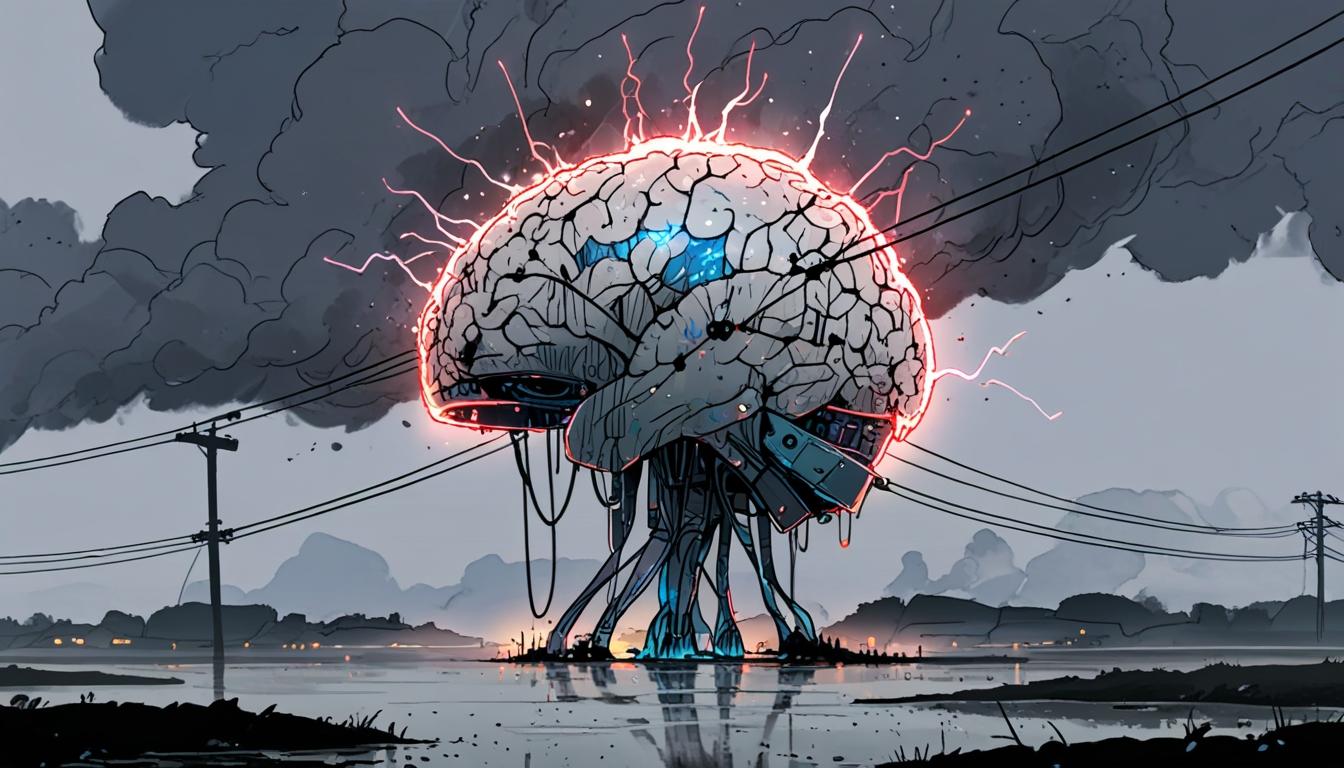

The rise of generative AI technology brings significant environmental costs and ethical dilemmas, prompting urgent discussion about its future.

Artificial Intelligence (AI) has become a prominent component of daily life, similar to the persistent pollen count in Atlanta during spring. However, the widespread use of generative AI raises serious concerns regarding its environmental impact and the ethical implications tied to job displacement and the quality of information produced by these technologies.

A report from nique.net outlines the significant energy and water consumption associated with training and operating generative AI models, labelling the technology an “unregulated beast.” The article indicates that, without oversight, generative AI could consume up to 0.5% of the world’s electricity within two years, an amount comparable to the entire electricity consumption of Argentina.

Research from Cornell University adds to the picture, predicting that the water required to cool AI data centres could reach half of England’s annual water usage, or more than four times Denmark’s. The article describes these figures as alarming given the increasing urgency of the climate crisis.

The environmental consequences of AI are illustrated through specific models such as GPT-3, whose training produced approximately 500 tons of carbon dioxide. The report equates this to the emissions from the annual electricity consumption of over 127 American households, or from over 108 vehicles. Training GPT-3 also consumed over 180,000 gallons of water, enough to meet the daily drinking needs of around 218,000 people. The report raises this as an ethical concern, noting that approximately one in eleven people worldwide lacks sufficient access to clean drinking water.

The ethical dilemmas surrounding generative AI extend to the sourcing of training data, which is often used without proper consent. In 2023, The Atlantic reported on Meta’s use of thousands of pirated works to train its large language model, igniting fears that developers are mining personal online content, including posts from unprotected social media accounts, without permission. This practice has spurred numerous copyright infringement lawsuits in the United States, with more than thirty cases pending against companies involved in AI development.

The quality of information produced by generative AI is a further concern. Models like GPT-3 have been found to produce incorrect responses up to 25% of the time and to cite sources incorrectly up to 60% of the time. The report also highlights that using generative AI can require ten times the energy of a standard Google search, raising further questions about the technology’s efficiency and reliability.

In summary, as the dialogue surrounding AI advances, resource consumption and ethical use are increasingly vital considerations, and many question the merit of relying on a technology that may compromise environmental stability and infringe upon users’ rights.

Source: Noah Wire Services

- https://news.mit.edu/2025/explained-generative-ai-environmental-impact-0117 – This MIT News article discusses the substantial energy demands of generative AI models, corroborating the claim about AI’s significant energy consumption and its potential to represent 0.5% of the world’s electricity usage.

- https://impactclimate.mit.edu/2025/01/17/considering-generative-ais-environmental-impact/ – The article emphasizes the serious implications of generative AI on electricity and water resources, aligning with the article’s claim regarding the environmental concerns surrounding AI training and operations.

- https://www.theverge.com/2023/10/10/23911059/ai-climate-impact-google-openai-chatgpt-energy – This source highlights the environmental impact of generative AI, including energy and water consumption, providing evidence for the alarming figures mentioned regarding AI’s resource usage in data centers.

- https://e360.yale.edu/features/artificial-intelligence-climate-energy-emissions – Yale Environment 360 details the carbon emissions produced during the training of AI models like GPT-3, supporting the assertion about AI’s carbon footprint from the training process.

- https://www.theverge.com/features/23764584/ai-artificial-intelligence-data-notation-labor-scale-surge-remotasks-openai-chatbots – This article discusses the labor and ethical concerns related to AI data sourcing, corroborating the claim about copyright issues faced by AI developers due to the use of unconsented materials.

Noah Fact Check Pro

The draft above was created using the information available at the time the story first emerged. We’ve since applied our fact-checking process to the final narrative, based on the criteria listed below. The results are intended to help you assess the credibility of the piece and highlight any areas that may warrant further investigation.

Freshness check

Score: 8

Notes: The narrative includes specific details about recent years (e.g., 2023) and current concerns regarding AI development. However, it does not mention very recent events or ongoing developments in the field, which slightly reduces its freshness score.

Quotes check

Score: 0

Notes: There are no direct quotes in the narrative to verify.

Source reliability

Score: 6

Notes: The narrative references a report from nique.net without specifying its credibility or trustworthiness. It also cites research from Cornell University, which is a reputable institution, but the specific report is not detailed.

Plausibility check

Score: 8

Notes: The claims about AI’s environmental impact and ethical concerns are plausible and align with current discussions. However, specific figures (e.g., energy consumption comparable to Argentina’s) could be verified further for accuracy.

Overall assessment

Verdict (FAIL, OPEN, PASS): OPEN

Confidence (LOW, MEDIUM, HIGH): MEDIUM

Summary: The narrative highlights significant concerns about AI’s environmental impact and ethical dilemmas, aligning with broader discussions. However, the lack of specific, verifiable sources for some claims and the absence of direct quotes reduce confidence in its overall accuracy.